Ubuntu 23.10 uses GNOME Shell as its default desktop, which makes some terminal settings unintuitive to change.

This post shows how to override Ubuntu's settings so that your preferred terminal is used everywhere, for a cohesive experience.

In this example, we will use Blackbox (`blackbox-terminal`).

First, let’s install Blackbox.

```shell
sudo apt install blackbox-terminal
```

Or:

```shell
flatpak install flathub com.raggesilver.BlackBox
```

The first setting we’ll change is the default terminal shortcut, Ctrl + Alt + T.

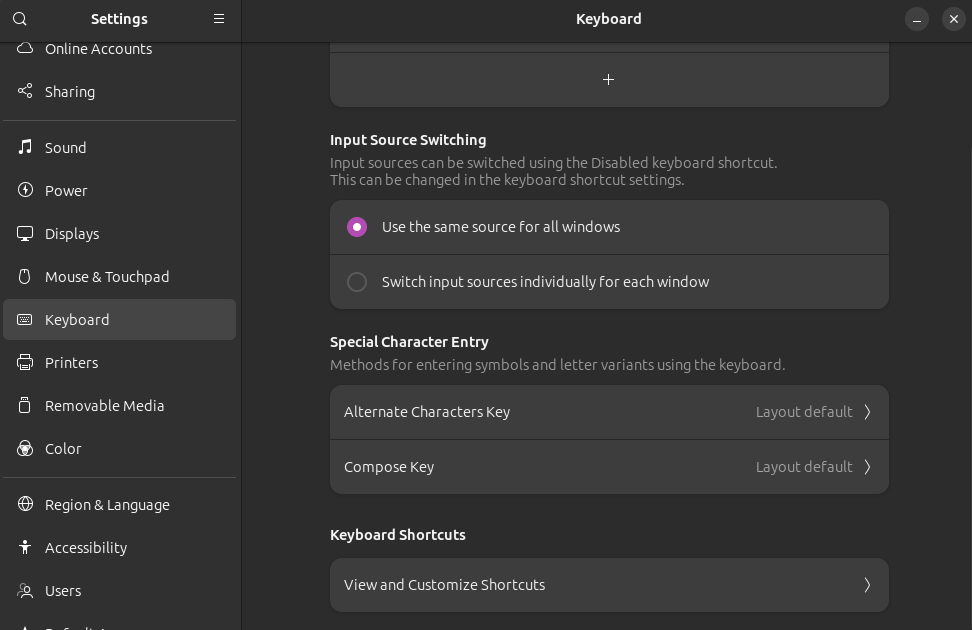

Open Settings and navigate to Keyboard Shortcuts.

Click “View and Customize Shortcuts”, scroll to “Custom Shortcuts”, and add a new shortcut with these values:

Name: blackbox | Command: /usr/bin/blackbox-terminal | Shortcut: Ctrl + Alt + T

When prompted about the conflict with the existing Ctrl + Alt + T shortcut, choose to replace it.
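If you would rather script this step, the same custom shortcut can likely be created with `gsettings`. The sketch below only builds the commands instead of running them. It assumes GNOME's standard `org.gnome.settings-daemon.plugins.media-keys` custom-keybinding schema and a slot named `custom0` (a hypothetical choice; pick an unused one). Setting `custom-keybindings` this way replaces any existing custom shortcuts, and it does not unbind the built-in Ctrl + Alt + T shortcut, so you may still need to resolve that conflict in Settings. If you installed the Flatpak, the command would be `flatpak run com.raggesilver.BlackBox` instead of `/usr/bin/blackbox-terminal`.

```python
import shlex
from typing import List

# Assumption: GNOME's standard custom-keybinding schema and dconf path layout.
SCHEMA = "org.gnome.settings-daemon.plugins.media-keys"
BASE_PATH = "/org/gnome/settings-daemon/plugins/media-keys/custom-keybindings"


def gvariant_str(value: str) -> str:
    """Quote a Python string as a GVariant text-format string literal."""
    escaped = value.replace("\\", "\\\\").replace("'", "\\'")
    return f"'{escaped}'"


def shortcut_commands(
    name: str, command: str, binding: str, slot: str = "custom0"
) -> List[List[str]]:
    """Build (but do not run) the gsettings argv lists for one custom shortcut."""
    path = f"{BASE_PATH}/{slot}/"
    relocatable = f"{SCHEMA}.custom-keybinding:{path}"
    return [
        # Caution: this replaces the whole list of custom shortcuts with a single entry.
        ["gsettings", "set", SCHEMA, "custom-keybindings", f"[{gvariant_str(path)}]"],
        ["gsettings", "set", relocatable, "name", gvariant_str(name)],
        ["gsettings", "set", relocatable, "command", gvariant_str(command)],
        ["gsettings", "set", relocatable, "binding", gvariant_str(binding)],
    ]


if __name__ == "__main__":
    # "<Primary><Alt>t" is how GSettings spells Ctrl + Alt + T.
    for argv in shortcut_commands("blackbox", "/usr/bin/blackbox-terminal", "<Primary><Alt>t"):
        print(shlex.join(argv))
```

Printing the commands first lets you review them; once they look right, each list could be passed to `subprocess.run(argv, check=True)`.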

Next, run the command below to select a different default terminal emulator.

```shell
sudo update-alternatives --config x-terminal-emulator
```

Choose the number that lists your terminal, in this case, Blackbox.
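To confirm the choice took effect, you can follow the symlink chain behind `x-terminal-emulator` (on Debian-based systems it passes through `/etc/alternatives`). A minimal sketch, assuming the usual `/usr/bin/x-terminal-emulator` location; `resolved_target` and `points_to` are hypothetical helper names. The same check works on `/bin/gnome-terminal` once the symlink step below is done.

```python
import os


def resolved_target(link: str) -> str:
    """Follow every symlink in `link` and return the final real path."""
    return os.path.realpath(link)


def points_to(link: str, expected_name: str) -> bool:
    """True if the fully resolved target's file name equals expected_name."""
    return os.path.basename(resolved_target(link)) == expected_name


if __name__ == "__main__":
    for link in ("/usr/bin/x-terminal-emulator", "/bin/gnome-terminal"):
        print(f"{link} -> {resolved_target(link)}")
```

After selecting Blackbox, `points_to("/usr/bin/x-terminal-emulator", "blackbox-terminal")` should return `True`.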

GNOME hardcodes gnome-terminal in some places. The easiest way to work around this is to symlink the Blackbox binary to the path where GNOME expects gnome-terminal.

Back up the real binaries first:

```shell
sudo mv /bin/gnome-terminal /bin/gnome-terminal.bak
sudo mv /bin/gnome-terminal.real /bin/gnome-terminal.real.bak
```

Then link your desired terminal in their place. This assumes the apt package, which provides `/bin/blackbox-terminal`:

```shell
sudo ln -s /bin/blackbox-terminal /bin/gnome-terminal
sudo ln -s /bin/blackbox-terminal /bin/gnome-terminal.real
```

We will also change our right-click menu options to use Blackbox.

Let’s uninstall the package that adds the Terminal entry to the right-click menu in Nautilus; we will replace it with our own functionality.

```shell
sudo apt remove nautilus-extension-gnome-terminal
```

Next, make sure the `python3-nautilus` package is installed; it is the Python 3 successor to the older `python-nautilus` package.

```shell
sudo apt install python3-nautilus
```

This package lets us write custom Python extensions for the file manager.

Navigate to `~/.local/share/nautilus-python/extensions` (you may have to create it).
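If the folder doesn't exist yet, a small sketch like the following creates it, including any missing parent folders, and returns the path for your extension file. `extension_path` is a hypothetical helper, and `open-terminal.py` is simply the example file name used in this post.

```python
from pathlib import Path
from typing import Optional


def extension_path(filename: str = "open-terminal.py", home: Optional[Path] = None) -> Path:
    """Ensure ~/.local/share/nautilus-python/extensions exists; return the file path inside it."""
    base = (home or Path.home()) / ".local" / "share" / "nautilus-python" / "extensions"
    # parents=True creates missing intermediate folders; exist_ok=True makes reruns harmless.
    base.mkdir(parents=True, exist_ok=True)
    return base / filename
```

Calling `extension_path()` with no arguments targets your real home directory; the `home` parameter exists mainly so the function can be tried against a scratch folder.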

Create a Python file with any name you like (e.g. `open-terminal.py`) and add the code below.

```python
import subprocess
from typing import List
from urllib.parse import unquote

from gi.repository import GObject, Nautilus


class OpenTerminalExtension(GObject.GObject, Nautilus.MenuProvider):
    def _open_terminal(self, file: Nautilus.FileInfo) -> None:
        # Strip the "file://" prefix and decode escapes like %20 to get a local path
        filename = unquote(file.get_uri()[7:])
        subprocess.Popen(["blackbox-terminal", "--working-directory=" + filename])

    def menu_activate_cb(
        self,
        menu: Nautilus.MenuItem,
        file: Nautilus.FileInfo,
    ) -> None:
        self._open_terminal(file)

    def menu_background_activate_cb(
        self,
        menu: Nautilus.MenuItem,
        file: Nautilus.FileInfo,
    ) -> None:
        self._open_terminal(file)

    def get_file_items(
        self,
        files: List[Nautilus.FileInfo],
    ) -> List[Nautilus.MenuItem]:
        # Only offer the entry when exactly one local folder is selected
        if len(files) != 1:
            return []

        file = files[0]
        if not file.is_directory() or file.get_uri_scheme() != "file":
            return []

        item = Nautilus.MenuItem(
            name="NautilusPython::openterminal_file_item",
            label="Open in Terminal",
            tip="Open Terminal In %s" % file.get_name(),
        )
        item.connect("activate", self.menu_activate_cb, file)
        return [item]

    def get_background_items(
        self,
        current_folder: Nautilus.FileInfo,
    ) -> List[Nautilus.MenuItem]:
        # Skip non-local locations (e.g. trash:// or recent://), which have no real path
        if current_folder.get_uri_scheme() != "file":
            return []

        item = Nautilus.MenuItem(
            name="NautilusPython::openterminal_file_item2",
            label="Open in Terminal",
            tip="Open Terminal In %s" % current_folder.get_name(),
        )
        item.connect("activate", self.menu_background_activate_cb, current_folder)
        return [item]
```
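The `file.get_uri()[7:]` slice in `_open_terminal` works because local URIs start with the seven characters `file://`, and `unquote` then decodes escapes such as `%20`. A slightly more defensive variant is sketched below as a hypothetical helper, `uri_to_path`: it parses the URI, checks the scheme, and returns `None` for anything that is not a local file, so it could stand in for the slice if you want that extra safety.

```python
from typing import Optional
from urllib.parse import unquote, urlparse


def uri_to_path(uri: str) -> Optional[str]:
    """Convert a local file:// URI to a filesystem path; return None for other schemes."""
    parsed = urlparse(uri)
    if parsed.scheme != "file":
        return None  # e.g. trash:///, recent:///, sftp://host/...
    # Percent-escapes such as %20 are decoded back to the original characters.
    return unquote(parsed.path)
```

For example, `uri_to_path("file:///home/me/My%20Projects")` gives `/home/me/My Projects`, while `uri_to_path("trash:///")` gives `None`.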

You can also customize the `label` argument in both `get_background_items` and `get_file_items`. I prefer my entry to simply read “Terminal”.

To apply your changes, run `nautilus -q` to quit Nautilus; it loads the extension the next time it starts.

This works, but right-clicking on the desktop and choosing “Open in Terminal” there will, oddly, still open gnome-terminal. The desktop icons extension (`ding@rastersoft.com`) controls this behavior, so we will have to edit it as well.

Open `/usr/share/gnome-shell/extensions/ding@rastersoft.com/app/desktopIconsUtil.js` with root privileges.
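Because this file belongs to a system package, it may be worth keeping a copy before changing it; package updates can also overwrite edits under `/usr/share`, and a backup makes it easy to compare. A minimal sketch, assuming you run it with enough privileges to write in that directory (for example via `sudo python3`); `backup_file` is a hypothetical helper, and the `.bak` suffix mirrors the naming used for the gnome-terminal backups.

```python
import shutil
from pathlib import Path


def backup_file(path: str, suffix: str = ".bak") -> Path:
    """Copy `path` to `path + suffix` unless that backup already exists."""
    src = Path(path)
    dst = src.with_name(src.name + suffix)
    if not dst.exists():
        # copy2 preserves timestamps and permission bits along with the contents
        shutil.copy2(src, dst)
    return dst
```

Skipping an existing backup means running it twice will not overwrite the pristine copy with an already-edited file.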

Edit the `launchTerminal` function to spawn your preferred terminal instead:

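```javascript
function launchTerminal(workdir, command) {
    // Start Blackbox in the clicked folder instead of gnome-terminal
    let argv = ['blackbox-terminal', `--working-directory=${workdir}`];
    if (command) {
        argv.push('-e');
        argv.push(command);
    }
    trySpawn(workdir, argv, null);
}
```

You can also edit the label in both `fileItemMenu.js:358` and `desktopManager.js:1082` (the line numbers may differ in other versions of the extension).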
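Since the `:358` and `:1082` line numbers above probably match only one release of the extension, a rough sketch like this can locate the label in whatever version you have by scanning the `.js` files of a directory (presumably the same `app` folder as `desktopIconsUtil.js`). It assumes the label appears literally as `Open in Terminal` in the source; `find_label` is a hypothetical helper name.

```python
from pathlib import Path
from typing import List, Tuple


def find_label(directory: str, needle: str = "Open in Terminal") -> List[Tuple[str, int, str]]:
    """Return (file name, 1-based line number, stripped line) for each .js line containing needle."""
    hits = []
    for js in sorted(Path(directory).glob("*.js")):
        text = js.read_text(encoding="utf-8", errors="replace")
        for lineno, line in enumerate(text.splitlines(), start=1):
            if needle in line:
                hits.append((js.name, lineno, line.strip()))
    return hits
```

For example, `find_label("/usr/share/gnome-shell/extensions/ding@rastersoft.com/app")` should list each place the label string occurs, with the line to edit.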

Now, regardless of how the terminal is launched, Blackbox should open instead!


Published 2024-05-21 06:00:00